UK-Based AI Adoption Roadmap for FP&A

Why Governance Must Lead Capability

As we have discussed in previous blog posts on this topic, AI is now embedded in most FP&A planning platforms. Forecasting engines, scenario modelling, and generative commentary are no longer optional add-ons; they are increasingly standard.

Yet across the UK, adoption remains cautious and uneven. This is often misinterpreted as conservatism or slow decision-making. In reality, it reflects a deeper truth: AI adoption in UK finance is fundamentally a governance challenge, not a technology one.

For UK CFOs and FP&A leaders, the question is not whether AI can improve planning. It is whether it can be adopted without weakening accountability, auditability, or trust.

A successful UK approach therefore requires a deliberate adoption roadmap: one that establishes control before capability, and insight before automation.

Why a UK-specific AI roadmap matters for FP&A

Many AI adoption playbooks assume:

• High tolerance for experimentation

• Comfort with opaque models

• Rapid iteration with limited upfront governance

These assumptions are poorly aligned with the UK finance environment. Here, forecasts must be defendable, assumptions traceable, and ownership explicit. Boards and audit committees expect prudence and consistency, not algorithmic novelty.

A UK AI adoption roadmap for FP&A therefore needs to:

• Start with governance, not pilots

• Build trust incrementally

• Preserve human judgement at every stage

Here's what a phased approach might look like:

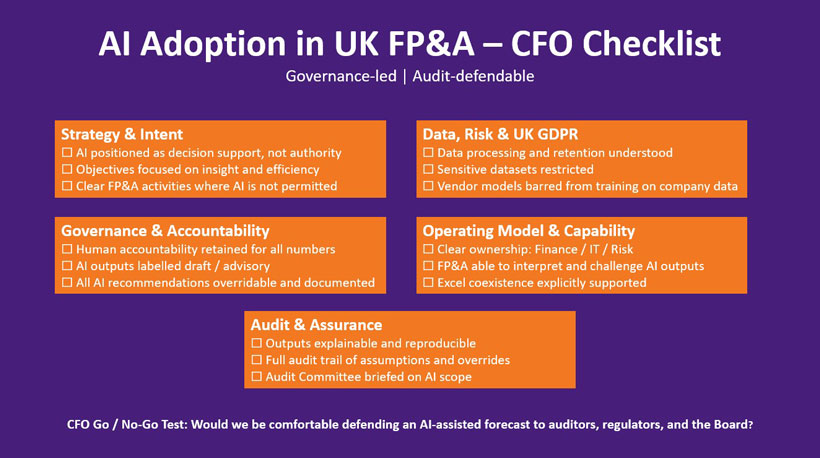

Phase 1: Control first - establishing the operating envelope

The first phase of AI adoption in UK FP&A has little to do with algorithms.

Its primary objective is to eliminate unmanaged risk.

Before enabling AI capabilities in planning software, CFOs and finance teams must be able to answer basic but critical questions:

• Where is AI permitted in FP&A?

• Where is it explicitly prohibited?

• Who is accountable for outputs?

This phase typically involves:

• Defining acceptable AI use in FP&A (draft vs final outputs)

• Confirming data residency, vendor controls, and UK GDPR alignment

• Establishing clear ownership across Finance, IT, and Risk/Audit

• Briefing the Audit Committee on scope and safeguards

While this phase delivers little visible innovation, it is foundational. Without it, AI adoption tends to fragment into shadow usage, inconsistent practices, and growing compliance exposure.
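For teams that want the answers to the three Phase 1 questions in a form their tools can enforce, the operating envelope can be captured as a simple AI use register. The sketch below is a minimal illustration in Python, assuming a hypothetical `UsePolicy` record and `check_use` function; the activities and owners are placeholders, not a recommended policy or any planning platform's API. The key design choice is that anything not explicitly registered is treated as prohibited.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class UsePolicy:
    """One row of a hypothetical AI use register for FP&A."""
    permitted: bool          # where AI is permitted or explicitly prohibited
    output_status: str       # e.g. "draft only" or "not applicable"
    accountable_owner: str   # who is accountable for outputs


# Illustrative entries only; real ones would come from the Finance, IT and Risk/Audit review.
AI_USE_REGISTER: Dict[str, UsePolicy] = {
    "variance_commentary": UsePolicy(True, "draft only", "FP&A Manager"),
    "anomaly_detection": UsePolicy(True, "draft only", "Financial Controller"),
    "board_pack_numbers": UsePolicy(False, "not applicable", "CFO"),
}


def check_use(activity: str) -> UsePolicy:
    """Return the policy for an activity; unregistered activities are prohibited by default."""
    policy = AI_USE_REGISTER.get(activity)
    if policy is None or not policy.permitted:
        raise PermissionError(f"AI use not permitted for: {activity}")
    return policy
```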

Phase 2: AI-assisted efficiency - value without judgement risk

Once control is established, UK organisations typically move to low-risk efficiency gains.

At this stage, AI is used to reduce effort, not to influence decisions. Common applications include:

• Drafting variance explanations

• Structuring management commentary

• Identifying data anomalies

• Documenting models and assumptions

Crucially, AI outputs are:

• Clearly labelled as draft

• Subject to human review and approval

• Prevented from updating forecasts or plans directly

This phase is often where AI earns its first real credibility in UK FP&A. It shortens cycles, reduces manual effort, and improves consistency, all without touching numbers or assumptions.

Trust is built by demonstrating that AI can save time without changing outcomes.
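The three controls on AI outputs described in this phase (draft labelling, human approval, no direct forecast updates) can be sketched as a small guard around AI-generated content. This is a minimal Python illustration, assuming hypothetical `AIDraft`, `approve`, `publish_commentary` and `update_forecast` names; it shows the pattern, not how any particular planning platform implements it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIDraft:
    """Hypothetical record for AI-generated text, e.g. a variance explanation."""
    text: str
    label: str = "DRAFT - AI-generated"   # outputs start life clearly labelled as draft
    approved_by: Optional[str] = None      # set only when a named person approves


def approve(draft: AIDraft, reviewer: str) -> AIDraft:
    """Record human review; the draft label is replaced only on approval."""
    if not reviewer.strip():
        raise ValueError("Approval requires a named reviewer")
    draft.approved_by = reviewer
    draft.label = f"Approved by {reviewer}"
    return draft


def publish_commentary(draft: AIDraft) -> str:
    """Release commentary only after a human has approved it."""
    if draft.approved_by is None:
        raise PermissionError("AI draft has not been reviewed and approved")
    return draft.text


def update_forecast(forecast: dict, line_item: str, value: float, source: str) -> None:
    """Forecast values can be written by people, never directly by the AI assistant."""
    if source == "ai":
        raise PermissionError("AI outputs cannot update forecasts directly")
    forecast[line_item] = value
```

The point of the sketch is that the restriction lives in the workflow itself rather than relying on users to remember it.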

Phase 3: AI-augmented planning - insight with accountability

The third phase is where AI begins to support planning insight, rather than just efficiency.

Here, AI is introduced into activities such as:

• Scenario generation

• Sensitivity analysis

• Identification of potential forecast drivers

• Highlighting emerging risks and opportunities

Importantly, AI does not set assumptions or select drivers. It proposes; management decides.

To be acceptable in a UK context, this phase requires:

• Clear separation between management views and AI insights

• Assumption logs and version control

• Transparent override mechanisms

When implemented well, AI at this stage improves the quality of discussion in forecast reviews and scenario debates, while remaining fully explainable and open to challenge.
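The Phase 3 requirements (separating management views from AI insights, keeping assumption logs with version control, and making overrides transparent) can be illustrated with an append-only assumption log. This is a minimal Python sketch, assuming a hypothetical `AssumptionLog` class and a two-value `source` field; it is not a feature of any specific planning tool. An override is simply a new management entry with its own rationale, while the AI suggestion it departs from stays on record.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass(frozen=True)
class AssumptionEntry:
    """One immutable version of a planning assumption (hypothetical schema)."""
    name: str
    value: float
    source: str        # "management" or "ai_insight", kept strictly separate
    author: str
    rationale: str
    version: int
    timestamp: datetime


@dataclass
class AssumptionLog:
    """Append-only log: earlier versions are never edited or deleted."""
    entries: List[AssumptionEntry] = field(default_factory=list)

    def record(self, name: str, value: float, source: str,
               author: str, rationale: str) -> AssumptionEntry:
        if source not in ("management", "ai_insight"):
            raise ValueError(f"Unknown source: {source}")
        if not rationale.strip():
            raise ValueError("Every entry, including overrides, needs a rationale")
        version = 1 + sum(1 for e in self.entries if e.name == name)
        entry = AssumptionEntry(name, value, source, author, rationale,
                                version, datetime.now(timezone.utc))
        self.entries.append(entry)
        return entry

    def management_view(self, name: str) -> Optional[AssumptionEntry]:
        """Latest management-owned value; AI insights never feed this directly."""
        owned = [e for e in self.entries if e.name == name and e.source == "management"]
        return owned[-1] if owned else None

    def ai_insights(self, name: str) -> List[AssumptionEntry]:
        """All AI-proposed values for an assumption, kept for challenge and review."""
        return [e for e in self.entries if e.name == name and e.source == "ai_insight"]
```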

Phase 4: AI-guided forecasting - recommendations, not decisions

More advanced UK adopters may progress to AI-guided forecasting, but always with caution.

At this stage, AI may:

• Recommend forecast adjustments

• Flag early warning indicators

• Apply probability weighting to scenarios

However, several safeguards are non-negotiable:

• Approval workflows for any AI recommendation

• Threshold-based alerts

• Visibility into model logic and changes

• Regular model performance reviews

A critical UK constraint applies here: models must not learn or adapt invisibly. Any change in behaviour must be reviewed and defendable.

AI may influence where management attention is focused, but it does not determine final numbers.
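Two of the Phase 4 safeguards, approval workflows and threshold-based alerts, can be sketched together. The Python below is a minimal illustration, assuming hypothetical `Recommendation`, `needs_alert`, `decide` and `apply_if_approved` names and an illustrative 5% alert threshold (not a recommended value). Every recommendation needs a named human decision; the threshold only decides which ones are escalated for closer attention. Recording the model version on each recommendation is one simple way to keep changes in model behaviour visible.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    """Hypothetical AI-recommended forecast adjustment awaiting a decision."""
    line_item: str
    current: float
    proposed: float
    model_version: str               # recorded so changes in model behaviour stay visible
    status: str = "pending"          # pending -> approved or rejected
    decided_by: Optional[str] = None


def needs_alert(rec: Recommendation, threshold_pct: float = 5.0) -> bool:
    """Flag recommendations whose relative change exceeds the (illustrative) threshold."""
    if rec.current == 0:
        return rec.proposed != 0
    change_pct = abs(rec.proposed - rec.current) / abs(rec.current) * 100
    return change_pct > threshold_pct


def decide(rec: Recommendation, approver: str, accept: bool) -> Recommendation:
    """Every recommendation, large or small, needs a named human decision."""
    if rec.status != "pending":
        raise ValueError("Recommendation has already been decided")
    rec.status = "approved" if accept else "rejected"
    rec.decided_by = approver
    return rec


def apply_if_approved(forecast: dict, rec: Recommendation) -> None:
    """The forecast changes only after explicit human approval."""
    if rec.status != "approved":
        raise PermissionError("Recommendation not approved")
    forecast[rec.line_item] = rec.proposed
```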

What this roadmap deliberately avoids

To remain aligned with UK governance expectations, this roadmap explicitly avoids:

• Autonomous forecast updates

• AI-generated board numbers

• Black-box decision-making

• Transfer of accountability away from management

These approaches may be technologically feasible, but they remain incompatible with the UK’s audit, regulatory, and board culture.

The UK advantage: deliberate adoption scales better

UK organisations often move more slowly through these phases than their international peers. But this pace brings an advantage.

By embedding AI within established planning logic, designing governance alongside capability, and reinforcing human accountability, UK FP&A teams tend to achieve more durable adoption.

AI becomes trusted not because it is impressive, but because it is controllable, explainable, and defendable.

Conclusion

AI will play an increasingly important role in FP&A planning. But in the UK, success will not be defined by how much judgement is automated.

It will be defined by how confidently AI-assisted outcomes can be explained to auditors, to boards, and to the wider business.

For UK CFOs and FP&A leaders, the most effective AI roadmap is one that moves deliberately from control, to efficiency, to insight, while ensuring that accountability never leaves human hands.
