A UK AI Maturity Model for FP&A Planning Software

As AI capabilities rapidly proliferate across FP&A planning software, finance leaders face a familiar challenge: separating genuine value from hype.

As we observed in our previous blog post, in the UK, this challenge is amplified by a finance culture that places a premium on governance, auditability, and management accountability. So, while many vendors promote visions of autonomous forecasting and self-learning finance functions, UK CFOs and FP&A leaders are asking a more fundamental question:

How do we adopt AI in planning without undermining control, credibility, or trust?

At InfoCat, we believe that the answer lies not in adopting AI faster, but in adopting it deliberately. A UK-appropriate AI maturity model reflects how AI actually scales in finance environments where explainability matters as much as accuracy. In this article, we’ll cover:

- Why A UK-Specific FP&A AI Maturity Model Is Needed

- How to Assess Your FP&A AI Maturity

- What This Model Deliberately Avoids

- Conclusion

Why A UK-Specific FP&A AI Maturity Model Is Needed

Most AI maturity models originate in the US and implicitly assume:

- Higher tolerance for experimentation

- Greater comfort with black-box models

- Faster decision cycles with looser governance

These assumptions do not translate cleanly to the UK. Here, forecasts must withstand auditor scrutiny, planning assumptions are closely tied to management judgement, and boards expect clear accountability for outcomes. A UK AI maturity model for FP&A therefore prioritises:

- Transparency over automation

- Governance over novelty

- Human judgement over model authority

How to Assess Your FP&A AI Maturity

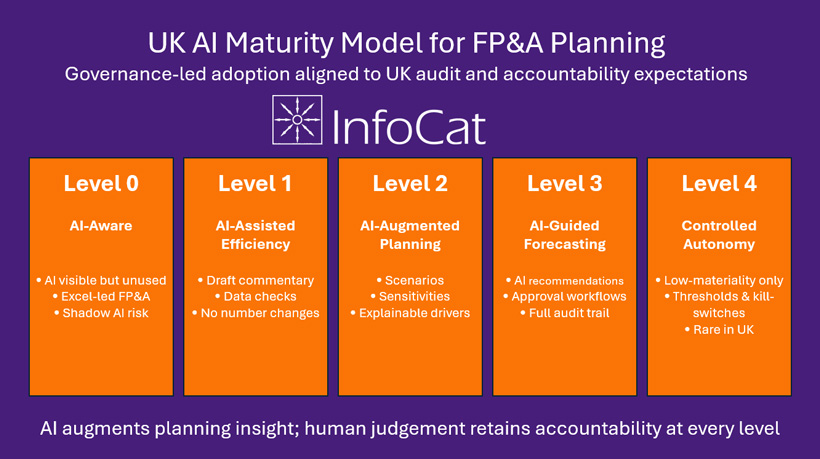

Assessing your FP&A AI maturity requires a structured, governance-led approach that is aligned to UK audit and accountability expectations. You can make that assessment against these five levels:

Level 0: AI-aware, not AI-enabled

At this stage, AI is visible but not operational within FP&A planning, so your planning processes are likely to remain:

- Excel-led or Excel-centric

- Supported by planning tools mainly for consolidation and workflow

AI capabilities may exist within software platforms, but they are either disabled or restricted, and any AI use is limited to ad-hoc, off-system experimentation.

The key risk at this level is not inaction, but uncontrolled experimentation—where analysts use AI tools outside governed systems, creating data and compliance exposure.

Success at Level 0 is defined by clarity: CFOs explicitly articulate where AI is allowed, where it is not, and who owns the risk.

Level 1: AI-assisted efficiency

This is where most UK FP&A teams should begin. At Level 1, AI is used to improve efficiency, not to influence financial judgement. Typical use cases include:

- Draft variance explanations

- Commentary structuring

- Data quality checks and anomaly flagging

- Documentation of models and assumptions

Crucially at this level, AI outputs are:

- Clearly labelled as draft

- Subject to human approval

- Prevented from directly updating forecasts or plans

This level works well in the UK because it delivers visible productivity gains without altering accountability. Numbers remain unchanged and judgement remains human. Trust is built by showing that AI saves time without changing decisions.
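To make the three Level 1 guardrails concrete, here is a minimal Python sketch. It is illustrative only: the names `AIDraft`, `approve`, and `update_forecast` are hypothetical and do not come from any particular planning platform, and a real implementation would rely on the platform's own security and workflow features.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AIDraft:
    """AI-generated commentary (hypothetical type); it carries text only, never numbers."""
    text: str
    status: str = "DRAFT"              # every AI output starts as a labelled draft
    approved_by: Optional[str] = None  # set only when a named person signs off


def approve(draft: AIDraft, reviewer: str) -> AIDraft:
    """Record human approval; the reviewer becomes accountable for the text."""
    if not reviewer.strip():
        raise ValueError("approval requires a named human reviewer")
    return AIDraft(text=draft.text, status="APPROVED", approved_by=reviewer)


def update_forecast(forecast: dict, line: str, value: float, author_type: str) -> None:
    """Change a forecast line; anything not authored by a human is refused."""
    if author_type != "human":
        raise PermissionError("AI output may not update forecasts at Level 1")
    forecast[line] = value


# Usage: the commentary is approved by a person, and the number stays untouched.
forecast = {"revenue": 1200.0}
draft = AIDraft(text="Revenue is below plan, mainly on volume.")
signed = approve(draft, reviewer="j.smith")
try:
    update_forecast(forecast, "revenue", 1250.0, author_type="ai")
except PermissionError:
    pass  # the AI-originated write is blocked; forecast["revenue"] is unchanged
```

The design choice worth noting is that the draft type has no field for a forecast value at all, so the separation between commentary and numbers is structural rather than a matter of policy.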

Level 2: AI-augmented planning

Level 2 represents the UK FP&A ‘sweet spot’ for AI value. Here, AI begins to support planning insight, but only within explainable, driver-led frameworks. Common applications include:

- Sensitivity analysis generation

- Scenario ideation

- Identification of potential forecast drivers

- Confidence ranges around forecasts

Importantly, AI does not select drivers or set assumptions. It suggests, while management decides. Governance therefore becomes more formal at this stage, with:

- Assumption logs

- Version-controlled scenarios

- Clear distinction between management views and AI insights

At Level 2, FP&A AI actively improves the quality of discussion in forecast reviews while remaining fully challengeable. This aligns closely with UK expectations of professional scepticism.
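One way to picture an assumption log that keeps management views and AI insights apart is the short Python sketch below. It is a simplified illustration under our own assumptions: `LogEntry` and `AssumptionLog` are hypothetical names, and the log lives in memory rather than in a governed planning system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class LogEntry:
    version: int
    driver: str
    value: float
    source: str            # "management" or "ai_suggestion"
    owner: Optional[str]   # the accountable person; None for AI suggestions


class AssumptionLog:
    """Append-only log (hypothetical): entries are never edited, only superseded."""

    def __init__(self) -> None:
        self._entries: List[LogEntry] = []

    def suggest(self, driver: str, value: float) -> LogEntry:
        """Record an AI suggestion; it has no owner and no effect on the plan."""
        return self._append(driver, value, "ai_suggestion", None)

    def decide(self, driver: str, value: float, owner: str) -> LogEntry:
        """Record a management assumption with a named owner."""
        return self._append(driver, value, "management", owner)

    def current(self, driver: str) -> Optional[LogEntry]:
        """Latest management assumption for a driver; AI suggestions are skipped."""
        for entry in reversed(self._entries):
            if entry.driver == driver and entry.source == "management":
                return entry
        return None

    def _append(self, driver: str, value: float, source: str,
                owner: Optional[str]) -> LogEntry:
        entry = LogEntry(len(self._entries) + 1, driver, value, source, owner)
        self._entries.append(entry)
        return entry


# Usage: the AI suggestion is logged but does not change the live assumption.
log = AssumptionLog()
log.decide("price_growth", 0.03, owner="finance.director")
log.suggest("price_growth", 0.045)
live = log.current("price_growth")  # still the 0.03 management entry
```

In this sketch the AI suggestion only takes effect if someone records a new management decision, which keeps the audit trail of who decided what intact.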

Level 3: AI-guided forecasting

At Level 3, the FP&A AI begins to recommend changes to forecasts but never finalises them. Typical capabilities at this stage include:

- AI-proposed forecast adjustments

- Early-warning indicators

- Probability-weighted scenarios

- Cross-driver impact analysis

However, this level requires significantly stronger controls such as:

- Approval workflows

- Threshold-based alerts

- Transparent model logic

- Regular performance reviews

A key UK-specific constraint emerges here: models that learn silently are rarely acceptable, and changes in model behaviour must be visible and reviewable.

FP&A AI at this stage is trusted enough to inform management attention, but not to replace management judgement.

Level 4: Controlled autonomy (selective and rare)

Fully autonomous AI remains uncommon in UK FP&A, and for good reason. Where it does exist, it is typically:

- Limited to low-materiality areas

- Applied to short-term operational forecasts

- Constrained by strict thresholds and kill-switches

Examples include:

- Volume or utilisation forecasting

- Demand signals feeding into FP&A

- Non-material cost projections

Even here, autonomy is conditional and closely monitored. End-to-end autonomous FP&A planning remains misaligned with UK governance norms.
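The conditions above (low materiality, strict thresholds, and a kill-switch) can be expressed as a simple decision rule. The Python sketch below is one possible shape, not a prescribed design: `AutonomyPolicy` and `decide` are hypothetical names, and the limits used are placeholder figures that each organisation would set for itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutonomyPolicy:
    materiality_limit: float   # largest line value eligible for autonomy
    max_change_pct: float      # largest automatic movement, as a fraction
    kill_switch_engaged: bool  # when True, nothing is applied automatically


def decide(current: float, proposed: float, policy: AutonomyPolicy) -> str:
    """Return 'auto_apply' or 'escalate' for an AI-proposed forecast value."""
    if policy.kill_switch_engaged:
        return "escalate"      # autonomy is switched off entirely
    if abs(current) > policy.materiality_limit:
        return "escalate"      # material lines always go to a person
    if current == 0:
        return "escalate"      # no baseline to measure the change against
    change = abs(proposed - current) / abs(current)
    return "auto_apply" if change <= policy.max_change_pct else "escalate"


# Usage with placeholder limits: lines up to 50,000 may move by up to 5%.
policy = AutonomyPolicy(materiality_limit=50_000.0, max_change_pct=0.05,
                        kill_switch_engaged=False)
small_move = decide(10_000.0, 10_300.0, policy)    # 3% move on a small line
large_move = decide(10_000.0, 11_000.0, policy)    # 10% move exceeds the threshold
material = decide(200_000.0, 201_000.0, policy)    # line is above materiality
```

Every path except one returns `escalate`, so in this sketch human review is the default and autonomy is the exception.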

What This Model Deliberately Avoids

This maturity model intentionally does not assume:

- AI-generated board numbers

- Autonomous budget or forecast updates

- Black-box decision-making

- Replacement of FP&A judgement

While these concepts may be technologically feasible, they remain culturally and institutionally incompatible with UK expectations of accountability. As noted in our previous blog post, UK organisations often progress through these levels more slowly than their US peers.

However, that pace brings an advantage: slower but stronger adoption. By embedding AI within existing planning logic, designing governance alongside capability, and preserving clear ownership of decisions, UK FP&A teams tend to achieve more sustainable adoption. AI in FP&A becomes trusted not because it is impressive, but because it is understandable.

Conclusion

The future of AI in UK FP&A planning is not autonomous finance. It is augmented finance, where technology enhances insight, accelerates analysis, and strengthens challenge. Reassuringly, human judgement remains firmly in control.

UK CFOs and FP&A leaders operate in an environment where audit defensibility, transparency, and management accountability are non-negotiable. As a result, the question is not how quickly AI can be deployed, but how confidently its outputs can be explained, challenged, and governed.

For UK CFOs and FP&A leaders, maturity is not measured by how much AI is deployed, but by how confidently it can be defended.

Find out more about AI adoption in Finance. Join our webinar AI in FP&A: From Hype to Reality on 17th February 2026.
